"Search Engine Journal" - 9 new articles

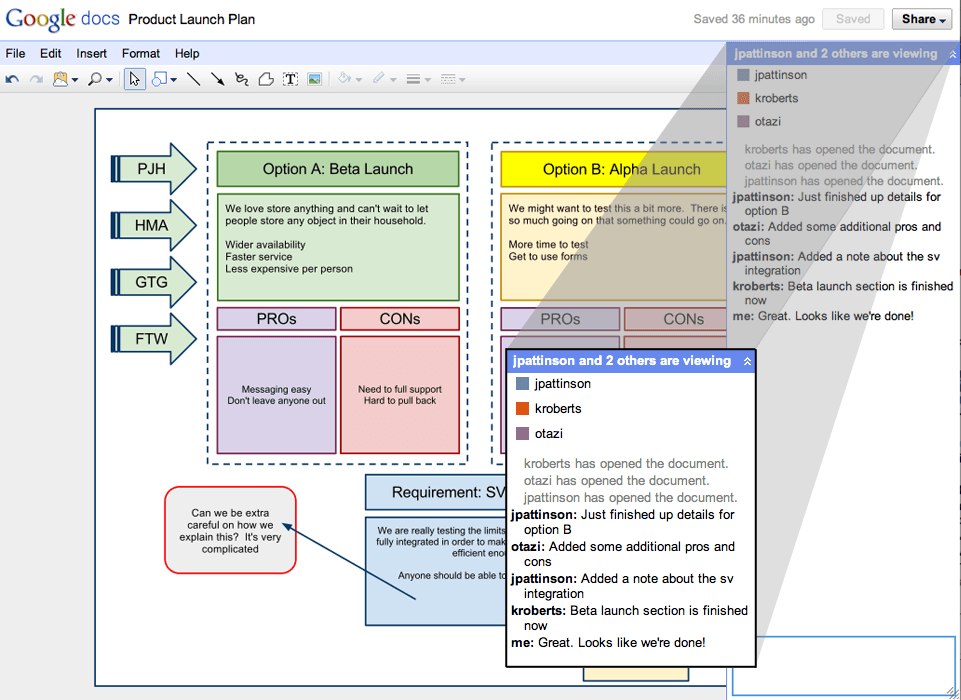

Google Rolls Out New Docs Features

Google is gradually rolling out enhancements to its online collaboration tool, Google Docs: specifically documents, spreadsheets and a new stand-alone drawings tool. Google has rebuilt the Docs infrastructure to give the online tools more flexibility, improved performance and a better platform for developing new features in the future. The new versions of the Google document editor, spreadsheet editor and drawings editor were all built with an even greater focus on speed and collaboration.

In brief, here’s what these new features will allow you to do:

Google Docs document editor: made more responsive, with the same real-time editing feature as Google spreadsheets. It now displays character-by-character changes as you make your edits. In addition, the editor now features a sidebar chat that lets you collaborate on documents with your colleagues as you make your edits. It also offers improved document formatting, with better import/export fidelity, an enhanced comment system, real margins and tab stops, and enhanced document layout.

Google Docs spreadsheet editor: now loads faster, is more responsive and scrolls more seamlessly. Other new features include a formula bar for cell editing, auto-complete, drag-and-drop columns, and simpler navigation between sheets.

Google Docs drawing editor: a new standalone drawing editor that allows you to collaborate in real time when working on flow charts, designs, diagrams and other fun or business graphics. It also allows you to share your work easily or add it to documents, spreadsheets or presentations using the web clipboard.

Overall, these are some nice new features that aim to make Google Docs an even more formidable force in the growing cloud computing niche. These new features are gradually being rolled out.
To try them out, simply click on “New version” at the top of any spreadsheet, or go to the “Document Settings” page and select “New version of Google documents.”

Check out the SEO Tools guide at Search Engine Journal.

Ouch! Google CEO Eric Schmidt Insults Bloggers

Speaking at a newspaper conference, Google CEO Eric Schmidt might have let slip something he should have kept to himself. Or should we give him the benefit of the doubt and assume that he did not mean what he said and was just trying to please the newspaper crowd?

According to reports, Mr. Schmidt made a rather insulting statement about bloggers:

“There is an art to what you do. And if you’re ever confused as to the value of newspaper editors, look at the blog world. That’s all you need to see.”

That was surely a painful, trite remark, putting down the billions of bloggers worldwide, right? Incidentally, in case Mr. Schmidt has already forgotten, Google happens to host possibly billions of bloggers running its AdSense business. Google also runs several company blogs, updated by Google employees who can themselves be called “bloggers.” And we here at Search Engine Journal, who count ourselves among those “bloggers,” have been covering Google news and praising Mr. Schmidt’s company for great new products and services. I don’t think we all deserve that insulting remark.

Google CEO Hopes That Apple’s iAd Will Help Google with AdMob Deal

It looks like this is the first time that Google has been glad to see a would-be rival launch a product. According to Reuters, Google CEO Eric Schmidt is hoping that Apple’s recently announced mobile ad platform, iAd, will strengthen Google’s case as it tries to convince government regulators to approve its acquisition of AdMob.

U.S.
antitrust regulators are arguing that Google’s acquisition of AdMob could hurt small app developers, who earn little from the apps themselves and rely instead on the advertising they sell inside them. If Google gets AdMob, app developers fear that Google will dominate the mobile advertising market.

Mr. Schmidt will possibly argue that Apple’s entry into the mobile advertising space only shows how competitive the market is, and that Google’s acquisition of AdMob will further boost this competitiveness rather than allow Google to dominate the market.

“It just seems obvious to me,” said Schmidt. “I hope it (Google’s purchase of AdMob) gets approved.”

Paid Internet Search Inventor Applies the Same Model to Twitter

From Idealab, the same people who invented the paid internet search model, comes a new startup called TweetUp. TweetUp hopes to make Twitter more relevant for everyone and finally put a stop to the notion that Twitter is all about noise. It bills itself as the fastest and easiest way to find the best tweets and tweeters, as well as a reliable way of finding targeted Twitter followers.

Basically, TweetUp boosts tweeters on any topic to the top of search results, raising relevant and useful tweets above the usual Twitter noise. TweetUp will also give these important and targeted tweets persistence against the backdrop of the millions of tweets posted every minute.

To do this, TweetUp has built an algorithm that assesses the quality, relevance, and influence of tweets and tweeters. It then combines selected tweets with a bid-based marketplace. TweetUp is composed of three parts: the destination site, third-party widgets and the advertiser product. The destination site ranks Twitter results by time and via an algorithm to determine which of them should go higher.
TweetUp splits revenue from ads 50/50. Potential TweetUp advertisers can bid per impression, per new follower or per click-through to an end URL. If you want to promote your tweets in search results, you may want to sign up at TweetUp. They are currently giving away a $100 credit towards ads.

Google Made its First UK Acquisition, Plink Mobile Visual Search

Google has been acquiring startups for a long time but, interestingly, this is the first time it has acquired a UK-based one. Google just bought the mobile visual search startup Plink. The acquisition is of course part of Google’s aim of acquiring at least one company a month.

Plink, a mobile visual search engine, was founded by PhD students Mark Cummins and James Philbin. Its first product was PlinkArt, a visual recognition tool that analyzes pictures of well-known artworks and paintings and then identifies them. The photos can then be shared with friends, and users can make purchases by clicking through the information given by PlinkArt. According to the developers, PlinkArt was downloaded more than 50,000 times in the six weeks following its launch.

Before you start wondering how Google will integrate Plink into its products and services: it seems that it will not. The acquisition is more of a manpower move. Google wants Cummins and Philbin to work on Google Goggles, the visual search project Google introduced previously. Apparently, the Google folks were impressed by Plink during the Android Developer Challenge, in which the two developers won $100,000. That prize has been Plink’s source of funding since then.
Multilingual SEO: Things to Remember

Google has recently done a series on the usability of multilingual websites, and it got me thinking about multilingual SEO. How do you, in fact, optimize the same website for keywords in multiple languages?

But let’s start with the basics. In simple terms, a multilingual website is a website that has content in more than one language. Such a website has a lot of on-page elements that are often done wrong. Let’s take a look at some common issues:

1) Language recognition

Once Google’s crawler lands on your multilingual website, it starts by determining the main language of every page. Google can recognize a page as being in more than one language, but you can avoid crawler confusion by doing the following:

2) URL structure

A typical pet peeve of SEO, but even more so with multilingual websites. To make the most of your URLs, consider language-specific extensions. Language-specific extensions are often used on multilingual websites to help users (and crawlers) identify the section of the website they are on and the language the page is in. For example:

http://www.website.ca/en/content.html

http://www.en.website.ca/content.html

http://www.website.ca/fr/content.html

http://www.fr.website.ca/content.html

This is a great way to organize URLs on a multilingual website because it not only helps the user but also makes it easier for the crawler to analyze and index your content.

But what if you want to create URLs with non-English characters? Here’s how to do it right:

Here’s how it will look properly escaped: http://www.website.ca/fr/cont%C3%A9nt.html
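The escaped form above is ordinary percent-encoding: each non-ASCII character is converted to its UTF-8 bytes, and each byte is written as `%XX`. As a minimal sketch, assuming Python and its standard `urllib.parse` module, the path of a URL could be escaped like this. The helper name `escape_url_path` is made up for this illustration, and the hostname is simply the example used above.

```python
from urllib.parse import quote, unquote, urlsplit, urlunsplit


def escape_url_path(url: str) -> str:
    """Percent-encode non-ASCII characters in the path of a URL.

    Hypothetical helper for illustration: only the path is touched,
    and "/" is left alone so the directory structure survives.
    """
    parts = urlsplit(url)
    # quote() encodes the text as UTF-8 and escapes each byte as %XX.
    escaped_path = quote(parts.path, safe="/")
    return urlunsplit(
        (parts.scheme, parts.netloc, escaped_path, parts.query, parts.fragment)
    )


escaped = escape_url_path("http://www.website.ca/fr/contént.html")
print(escaped)           # http://www.website.ca/fr/cont%C3%A9nt.html
print(unquote(escaped))  # http://www.website.ca/fr/contént.html
```

One caveat with this sketch: it expects an unescaped path. Running it on a path that already contains `%C3%A9` would escape the `%` signs a second time.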

3) Crawling and Indexing

Another common area of focus for SEO. On multilingual websites, follow these recommendations to get more pages crawled:

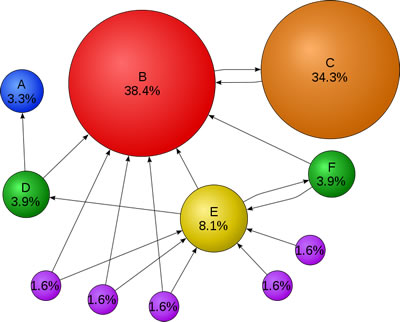

By getting the on-page basics right, you will set a great base for your multilingual SEO in the future and, unlike so many others, you will not have to beg SE crawlers (in multiple languages) to come and index your content.

Beginner’s Guide to Link Metrics

In the beginning, search engines were crap.

I don’t mean to knock the pioneers, but they simply relied too heavily on what webmasters said their websites were about. That’s why porn sites ranked for searches like “the whitehouse.” People are shameless: if they can scam their way into money, you’d better believe they’ll do it. Follow the incentives.

When Google came onto the scene, touting founder Larry Page’s new PageRank metric, things changed. PageRank was a way to measure websites not by how relevant their webmasters said they were, but by how relevant and authoritative other webmasters said they were.

Since then, links have been central to getting sites to rank in search results. It’s nearly impossible to rank without them. PageRank is definitely not a tell-all metric, but one of its core theories still holds true: not all links are created equal.

If you’re getting into the SEO game now, you probably already know you need links to rank. And you’ve probably been run through the gamut of ways you can build or attract them. This post assumes manual link building (i.e. everything other than linkbait) is at least part of your strategy.

Link metrics essentially answer (or attempt to answer) this question: how strong is the page where the link will be published? The stronger the page, the stronger the link it passes. What follows is an introductory guide to metrics we can use to evaluate links.

PageRank

To learn the basics of PageRank it’s a good idea to read Larry Page and Sergey Brin’s thesis paper, The Anatomy of a Large-scale Hypertextual Web Search Engine, from their PhD work at Stanford.
Yes, it’s academic writing, so you may want to stab your eyes out with a pretzel at some point, but this document formed the basis of one of the biggest technology revolutions in modern history, so buck up.

Alright, I know probably 95% of you won’t read the paper, so have a look at this graphic. It gives you the basic idea. (Arrows represent links.)

PageRank is basically a 0-10 score for a page based on how many links it has (and how strong those links are). It’s logarithmic, meaning each step up is much harder than the last: by the usual rule of thumb, it’s about 10x harder to get from 2 to 3 than it is to get from 1 to 2. It generally follows that the higher the PageRank of a particular page, the more PageRank (or “link juice”) that page can pass to other pages through its links.

While most SEOs worth their salt will tell you to ignore PageRank, they still secretly check it when nobody’s looking.

How can you collect PageRank data?
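To make the logarithmic scale described in this section concrete, here is a small arithmetic sketch. It assumes each point on the scale multiplies the underlying link strength by 10, which is this post's rule of thumb rather than a figure Google has published. The function names `raw_strength` and `step_cost` are invented for the example.

```python
def raw_strength(score: int, base: float = 10.0) -> float:
    """Underlying link strength implied by a displayed score.

    Assumes a logarithmic scale with the given base; base 10 is an
    assumption taken from the rule of thumb, not a published value.
    """
    return base ** score


def step_cost(score: int, base: float = 10.0) -> float:
    """Extra raw strength needed to move from `score` to `score + 1`."""
    return raw_strength(score + 1, base) - raw_strength(score, base)


for score in range(1, 5):
    ratio = step_cost(score + 1) / step_cost(score)
    print(f"{score + 1} -> {score + 2} costs {ratio:.0f}x as much as {score} -> {score + 1}")
```

Under that assumption every step costs ten times the one before it, so the gap between a 5 and a 6 is far larger than the gap between a 1 and a 2, even though both look like a single point.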

mozRank & mozTrust (from SEOmoz)

SEOmoz, based in Seattle, moved from their original model of an SEO agency to helping SEOs do their jobs better by providing tools, resources and education. You’ve probably heard of them.

In October of 2008 SEOmoz launched something exciting: Linkscape. Linkscape was the culmination of a pretty bold endeavor on the part of SEOmoz and their engineering team: they’d created a link graph of the web. They crawled about a billion URLs and collected link information on each one, storing it in a rather massive index.

I was at SMX East in New York City the morning Linkscape was announced, and I can confirm that Rand Fishkin, CEO and founder, was chased through the expo hall aisles by a squadron of screaming SEO geeks.

Along with the launch of Linkscape and its reporting tools, SEOmoz launched (and continues to expand) an impressive set of tools that use their link index to evaluate links and pages.

In order for this data to be actionable, it needed to be organized and evaluated in the same way search engines handle their own link metrics. Thus mozRank was modeled after PageRank, and mozTrust was modeled after TrustRank (a metric cited in a Yahoo! research paper as a potential means to measure the “trustworthiness” of a given page or website).

Essentially, mozTrust measures the flow of trust from core “seed” sites (whitehouse.gov, for example) out to the rest of the web. The idea: the closer a page or site is associated with a trusted seed (via links from that seed), the more mozTrust it has (and, we’re to assume, ranking power).

How can you collect mozRank and mozTrust data?

ACRank (Majestic SEO)

Majestic SEO was actually on the scene with a competitive link index well before SEOmoz launched Linkscape.

In terms of a qualitative metric, Majestic features ACRank, which is, according to their website, a “very simple measure of how important a particular page is…depending on number of unique referring ‘short domains’” (a “short domain” is Majestic’s term for what many refer to as a “root domain”).

Majestic’s index of the web is reportedly larger than SEOmoz’s by quite a bit. Majestic’s latest estimate was 1.5 trillion URLs indexed, compared with SEOmoz’s “43+ billion.” It’s worth noting, however, that there probably aren’t 1.5 trillion relevant pages on the web. There’s a lot of crap out there, and most of it is totally worthless to search engines and users.

For a full comparison of Majestic SEO to SEOmoz’s Linkscape, check out this post from Dixon Jones, which provides a pretty thorough analysis.

How can you collect data on ACRank?

A Great Big Grain of Salt

While all of the metrics above are solid places to start when evaluating links, they each come with gotchas.

Since the value of links became common knowledge, search engines (especially Google) have been fighting the tactics that allow people to “game” their way to the top by building links easily and scalably. In short, Google doesn’t like to look stupid. It hurts their brand (and their feelings).

Some link tactics that made Google look stupid (and brought down their wrath):

Any nofollowed link is essentially worthless from an SEO standpoint. It doesn’t pass ranking power.

Additionally, Google and the other search engines take manual action to penalize websites for selling links or otherwise being manipulative or violating their webmaster guidelines. Sometimes, the website in question drops significantly in rankings. Sometimes it’s booted entirely from the index. Sometimes its ability to pass value through its links is stripped.

The reason you need to know all this when you start evaluating links using the metrics above: these metrics don’t always tell the whole story. For instance, if a site has had its ability to pass link value stripped, the PageRank may still look solid, but the links themselves pass no value. Sometimes an individual page on a site (a “links” or “resources” page) will be penalized, and its PageRank will be stripped. You can see this by using a toolbar or another means to check the page.

One way to check for penalties: compare the mozRank or ACRank of a page to its PageRank. If there’s a great discrepancy, and the mozRank/ACRank is high while the PageRank is very low or zero, this is a good sign the page may have been hit by a Google penalty.

The bigger question here is how reliable third-party metrics are, since they can never be a true representation of a search engine’s link graph. When you throw manual penalties into the mix, the entire graph shifts. One powerful website stripped of its ability to pass link juice then impacts all the sites it links to: their value drops as a result, as does the value of the links they pass, and so on.

Still, Google isn’t going to open up their true link graph to the public. So we’ve got to take what we can get, but we should be aware of these issues.

More reading

“Nofollow”

To learn more about the “nofollow” attribute and juiceless links, check out this excellent guide written by Paul Teitelman over at Search Engine People.
Other link metrics

This post from Jordan Kasteler at Search & Social explains one of the often-overlooked link metrics: link placement. This post from Big Dave Snyder provides some great tips on evaluating links that aren’t covered in this post.

Have your own link metrics you rely on for your link building? Share them in the comments.

When Promoting Link Bait, Twitter is King!

A few weeks back we were promoting a link bait article on Digg. Before long, the submission hit the front page, but within minutes it was buried. In the meantime, however, it was quickly picking up steam on Twitter. Within a few hours, the article got picked up by a major online news source and retweeted, and it exploded from there (and is still going 3 weeks later).

As of today, the link bait article that went viral on Twitter has 32K backlinks (according to Aaron Wall's SEO toolbar), many from highly trusted websites and news sources. While I don't usually give much credence to PageRank, after the recent PR update the article's page has a PR 6 while the site's homepage remains a PR 4. This simply speaks to the quality of the links to the article.

Because it's a client, I can't link to the site or link bait piece (trust me, I REALLY want to). Instead, what I'll do is give you some insight as to why, if part of your social media content strategy is to obtain links, Twitter is king.

The goal of link bait

First, some background. If you're well versed in the art & science of link bait, you can skip this section.

The goal of any link bait campaign is to (you guessed it) attract links. The idea is to create content that evokes an emotion in webmasters that causes them to want to either spread or perhaps talk about your content from their own site, ideally with a link back.
There's a variety of emotions that can accomplish this, and if you want to learn more about the subject, I highly suggest reading Todd Malicoat's article on link bait hooks.

The trick to link bait, however, (beyond having FANTASTIC content) is making sure that content gets in front of the right webmasters. This is where social media promotion comes into play. Now, you don't need to go "viral" in order to attract links; sometimes a link bait piece simply targets a small group or niche of webmasters. Still, in order to attract links, you need to have a promotion strategy that successfully places your content piece in front of the right people.

Social Bookmarking & News Sites

Sites like Digg, Reddit, and StumbleUpon are great for promoting link bait. If you're successful in hitting the front page or becoming popular on one of these services, the potential for a high volume of traffic in a short period of time is difficult to match. This type of success, too, nearly guarantees you'll see links from it. Bloggers and webmasters are generally active in these communities, and tend to use them for inspiration and ideas for their next post or article.

While these communities are great, the larger ones reach such a broad audience that niche content can sometimes go unnoticed or get buried. There are a variety of niche social bookmarking communities, however, that may be a much better use of your time. For example, Tipd.com is a fantastic (albeit small) community for financial news and resources. Networking in these niche communities, too, can form more fruitful relationships, particularly if your content is similar to your new contacts'. You won't see a tremendous number of links here, but a few good and relevant ones could be worth the effort.

Why Twitter is King

Social bookmarking sites (like Digg) are great for promoting link bait, and usually shouldn't be overlooked when promoting your content.
However, Twitter has some distinct advantages over these services, particularly for attracting links. Here are a few:

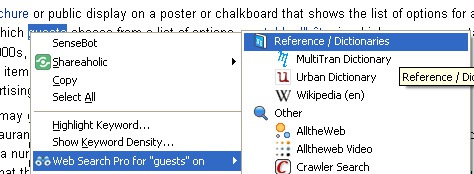

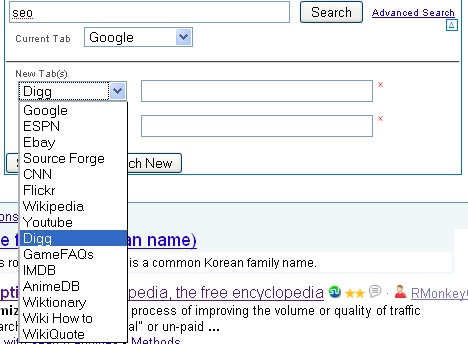

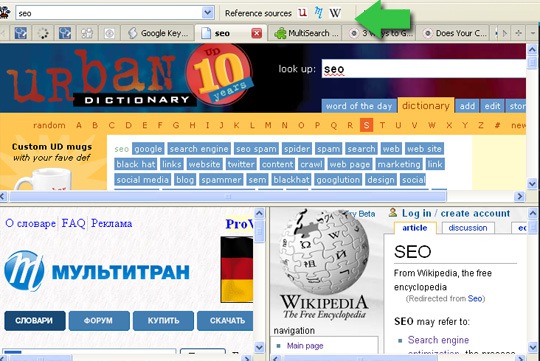

3 Ways to Use Multiple Search Engines Simultaneously

How much do you search? Since you read this blog, you probably search quite a lot.

This post aims to enhance your search productivity by showing you how to search multiple search engines with one click of a mouse!

1. Web Search Pro

Web Search Pro is the Firefox addon I reviewed last week. It integrates into your Firefox search box and thus controls your search plugins.

One of its most awesome features is the ability to group your search engines by theme, category or purpose and then launch one search for the whole group. For example, you can create a group for your reference sources or a separate group to search all your favorite (SEO) forums, etc.

2. Google Bump (Greasemonkey)

Google Bump is a cool Greasemonkey script that adds quite a few Google search enhancements, including multi-search.

You can search 3 search engines simultaneously (available via the drop-down). Search results will open in new tabs. Available search engines include Google, Digg, CNN, Flickr, Wikipedia, YouTube, eBay, etc.

3. Searchbastard

Searchbastard lets you group search engines by theme or purpose and loads search results in one tab but in separate frames.

The searchbar allows you to quickly perform a search, and quickly repeat that search using another engine. Everything is extremely customizable: you can arrange the frames in a multisearch, you can assign actions to all toolbar events, etc. The plugin is lightweight, and features are dynamically loaded when needed.
